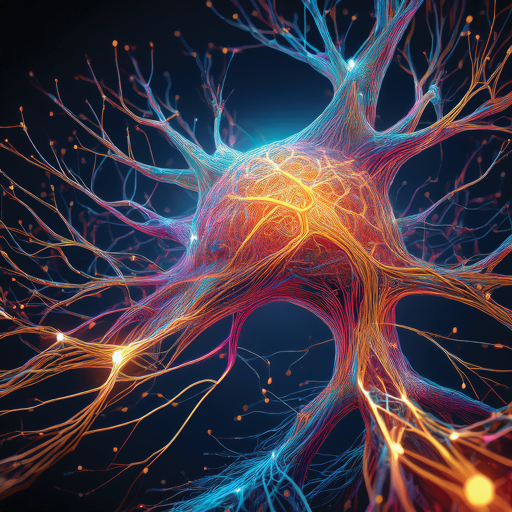

The Perceptron: A Fundamental Building Block of Neural Networks

What is a Perceptron?

At its core, a perceptron is a type of artificial neuron—a computational model inspired by the biological neurons in the human brain. It is designed to perform binary classification tasks, distinguishing between two classes of input data.

The perceptron consists of:

- Input values (features): These are the data points or signals that the model receives.

- Weights: Each input is multiplied by a corresponding weight, which represents the strength or importance of that input.

- Bias: An additional constant input that helps shift the activation threshold.

- Activation function: Usually a step function that outputs either 0 or 1, indicating the class prediction.

How Does a Perceptron Work?

The perceptron computes a weighted sum of its inputs and passes this sum through an activation function. Formally, the output y is defined as:

y = 1 if ∑ w_i*x_i + b > 0

y = 0 otherwise

Here, "w_i" are the weights, "x_i" are the input features, and "b" is the bias term.
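The formula above can be sketched in Python as a minimal forward pass. The function names `step` and `predict`, and the example numbers, are illustrative assumptions, not part of any particular library.

```python
from typing import Sequence


def step(z: float) -> int:
    """Step activation: 1 if z is strictly positive, else 0."""
    return 1 if z > 0 else 0


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Perceptron output y = step(sum_i w_i * x_i + b)."""
    if len(weights) != len(x):
        raise ValueError("weights and x must have the same length")
    z = sum(w_i * x_i for w_i, x_i in zip(weights, x)) + bias
    return step(z)


# Hypothetical example: w = (0.5, -1.0), b = 0.2, x = (2.0, 0.5)
# z = 0.5*2.0 + (-1.0)*0.5 + 0.2 = 0.7 > 0, so y = 1
print(predict([0.5, -1.0], 0.2, [2.0, 0.5]))  # 1
```

With x = (0.0, 1.0) instead, z = -1.0 + 0.2 = -0.8, and the same weights give y = 0.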

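If the weighted sum exceeds zero, the perceptron outputs class 1; otherwise, it outputs class 0. Using some other threshold θ is equivalent to setting the bias to b = -θ, so fixing the threshold at zero loses no generality. Because the output depends only on which side of the hyperplane ∑ w_i*x_i + b = 0 an input falls, the perceptron can only represent linear decision boundaries, and training adjusts where that boundary lies.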
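To make the linear decision boundary concrete, here is a sketch of a two-input perceptron with hand-picked (not learned) weights w_1 = w_2 = 1 and b = -1.5, chosen only for illustration. Its boundary is the line x_1 + x_2 = 1.5, so only the input (1, 1) falls on the positive side and the unit computes logical AND.

```python
from itertools import product


def perceptron(x1: int, x2: int, w1: float = 1.0, w2: float = 1.0, b: float = -1.5) -> int:
    """Two-input perceptron; the default weights and bias are hand-picked for illustration."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# The boundary w1*x1 + w2*x2 + b = 0 is the line x1 + x2 = 1.5.
# Only (1, 1) lies on the positive side, so this unit computes logical AND.
truth_table = {(x1, x2): perceptron(x1, x2) for x1, x2 in product([0, 1], repeat=2)}
print(truth_table)  # {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
```

Moving the bias to b = -0.5 shifts the line to x_1 + x_2 = 0.5, and the same unit computes OR instead. Changing the weights and bias moves or tilts the line, but it always stays a straight line.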

Training the Perceptron

Training a perceptron involves adjusting the weights and bias to correctly classify training examples. This is done using the perceptron learning algorithm, which iteratively updates the weights based on classification errors:

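- Initialize weights and bias to small random values.

- For each training example:

  - Compute the predicted output y.

  - If the prediction is incorrect, update each weight and the bias: w_i -> w_i + lr*(d - y)*x_i and b -> b + lr*(d - y), where "lr" is the learning rate, "d" is the desired output, and "y" is the predicted output. When the prediction is correct, d - y = 0 and nothing changes.

- Repeat the pass over the training set until every example is classified correctly (convergence) or a maximum number of iterations is reached.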
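The training loop described above might look like the following sketch. The function name `train_perceptron`, the initialization range of ±0.01, the default learning rate of 0.1, the epoch cap, and the fixed random seed are all assumptions chosen for illustration. Convergence is detected as one full pass over the data with no misclassifications.

```python
import random
from typing import List, Sequence, Tuple


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Step activation applied to the weighted sum plus bias."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


def train_perceptron(
    X: Sequence[Sequence[float]],
    d: Sequence[int],
    lr: float = 0.1,
    max_epochs: int = 100,
    seed: int = 0,
) -> Tuple[List[float], float, int, bool]:
    """Perceptron learning rule. Returns (weights, bias, epochs_run, converged)."""
    rng = random.Random(seed)
    n_features = len(X[0])
    # Initialize weights and bias to small random values (range is an assumption).
    weights = [rng.uniform(-0.01, 0.01) for _ in range(n_features)]
    bias = rng.uniform(-0.01, 0.01)

    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, target in zip(X, d):
            y = predict(weights, bias, x)
            delta = target - y  # 0 when the prediction is correct
            if delta != 0:
                errors += 1
                for i in range(n_features):
                    weights[i] += lr * delta * x[i]  # w_i <- w_i + lr*(d - y)*x_i
                bias += lr * delta                   # b <- b + lr*(d - y)
        if errors == 0:
            # A full pass with no mistakes: the perceptron has converged.
            return weights, bias, epoch, True
    return weights, bias, max_epochs, False


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
d_and = [0, 0, 0, 1]
w, b, epochs, converged = train_perceptron(X, d_and)
print(converged, [predict(w, b, x) for x in X])  # True [0, 0, 0, 1]
```

Because d - y is zero for a correct prediction, the `if delta != 0` check only skips work; applying the update unconditionally would produce the same weights.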

Limitations and Legacy

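Although the perceptron was highly influential, it has notable limitations. A single perceptron can only solve problems in which the data is linearly separable, meaning that some hyperplane puts every example of one class on one side and every example of the other class on the other side. When the data is linearly separable, the learning algorithm is guaranteed to find such a hyperplane after a finite number of updates (the perceptron convergence theorem); when it is not, the algorithm never completes an error-free pass. The classic example is XOR: the inputs (0, 1) and (1, 0) must produce 1 while (0, 0) and (1, 1) must produce 0, and no single straight line separates these two pairs of points.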
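The following sketch illustrates this limitation by running the same learning rule on AND and on XOR. To stay short, it starts from zero weights instead of small random values and uses a learning rate of 1.0. With zero initialization, the learning rate only rescales the weights and does not change any prediction. These choices, the function name `train_perceptron`, and the 1000-epoch cap are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def train_perceptron(
    X: Sequence[Sequence[float]],
    d: Sequence[int],
    lr: float = 1.0,
    max_epochs: int = 1000,
) -> Tuple[List[float], float, bool]:
    """Perceptron learning rule from zero weights; returns (weights, bias, converged)."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in zip(X, d):
            y = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
            if y != target:
                errors += 1
                w = [wi + lr * (target - y) * xi for wi, xi in zip(w, x)]
                b += lr * (target - y)
        if errors == 0:
            return w, b, True  # an error-free pass: converged
    return w, b, False  # still making mistakes after max_epochs passes


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
_, _, and_ok = train_perceptron(X, [0, 0, 0, 1])  # linearly separable
_, _, xor_ok = train_perceptron(X, [0, 1, 1, 0])  # not linearly separable
print(and_ok, xor_ok)  # True False
```

No learning rate or epoch cap changes the XOR result, because no choice of weights and bias classifies all four points correctly.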

However, the perceptron inspired the development of multilayer networks, such as the multilayer perceptron (MLP), which use multiple layers of neurons and nonlinear activation functions to overcome these limitations. These modern networks form the basis of deep learning models used today in computer vision, natural language processing, and many other domains.
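A minimal sketch of why an extra layer helps: the network below solves XOR with two hidden step units (one computing OR, one computing NAND) feeding an output unit that computes AND of the hidden outputs. The weights are hand-picked for illustration, not learned, and the names `step` and `xor_mlp` are hypothetical. MLPs used in practice replace the step function with differentiable nonlinear activations (such as sigmoid or ReLU) so that the weights can be learned by backpropagation.

```python
from itertools import product


def step(z: float) -> int:
    """Step activation: 1 if z is strictly positive, else 0."""
    return 1 if z > 0 else 0


def xor_mlp(x1: int, x2: int) -> int:
    """Two-layer network of step units with hand-picked (not learned) weights."""
    h_or = step(x1 + x2 - 0.5)        # hidden unit 1: OR
    h_nand = step(-x1 - x2 + 1.5)     # hidden unit 2: NAND
    return step(h_or + h_nand - 1.5)  # output unit: AND of the hidden units


print([xor_mlp(x1, x2) for x1, x2 in product([0, 1], repeat=2)])  # [0, 1, 1, 0]
```

Each unit on its own still draws a single straight line; the hidden layer maps the four inputs to a new space in which the output unit's line can separate the XOR classes.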

Conclusion

The perceptron represents a crucial stepping stone in the evolution of artificial intelligence. Despite its simplicity, it embodies key ideas such as weighted input aggregation, threshold activation, and iterative learning. Understanding the perceptron provides valuable insight into the mechanics of neural networks and the principles behind modern machine learning techniques.